
Archives & Museum Informatics

2008 Murray Ave.

Suite D

Pittsburgh, PA

15217 USA

info@archimuse.com

www.archimuse.com


Evaluating the Usability of a Museum Web Site

Ilse Harms and Werner Schweibenz, University of Saarland, Saarbrücken, Germany

Abstract

The paper presents a research project conducted by the Department of Information Science in cooperation with the Saarland Museum, the art museum of the Federal State of Saarland, Germany. The study had two aims: first, to evaluate some methods of usability engineering for the Web, and second, to evaluate and improve the usability of the Saarland Museum's Web site. Two usability engineering methods were applied: an expert-focused heuristic evaluation based on the Heuristics for Web Communication, and a user-focused laboratory test with actual users and the thinking-aloud method. The combination of heuristic evaluation and laboratory testing provided interesting results. The heuristic evaluation detected a large number of usability problems. The laboratory test confirmed most of these findings and revealed some additional problems that the experts had not discovered, because actual users often have a different perspective. The evaluation led to a redesign of the Web site.

Keywords: Usability engineering, Web site usability, heuristic evaluation, user-friendly museum Web pages, evaluation of museum Web sites

1. Usability Engineering for Museum Web Sites

The World Wide Web offers museums the possibility of disseminating information about their collections to a worldwide public. In this sense, one can state that "museums are in the communication business" (Silverstone 1988: 231) and that there are interesting parallels between museums and the mass media (Schweibenz 1998: 187). When attributing communicative and interactive functions to a museum Web site, it is especially important to keep in mind the users and their need for easy interaction, because "the Web is a domain which must be instantly usable" (Rajani & Rosenberg 1999). This raises the question of what usability means for museum Web sites. According to Garzotto, Matera & Paolini (1998), usability is "the visitor's ability to use these sites and to access their content in the most effective way. As a consequence, it has become compelling to provide both quality criteria that WWW sites must satisfy in order to be usable, and systematic methods for evaluating such criteria." Consequently, Web usability and Web usability engineering methods have become an important issue (Garzotto, Matera & Paolini 1998; Teather & Wilhelm 1999; Cleary 2000).

The usability of Web sites can be tested and improved in a process called usability engineering. According to Krömker (1999: 25), usability engineering is a set of methods for designing user-friendly products and enhancing product quality. The methods of usability engineering can be categorized into expert-focused and user-focused methods. Expert-focused methods such as heuristic evaluation and user-focused methods such as laboratory testing with actual users can be used in combination (Nielsen 1997a: 1543), and the literature agrees that combining heuristic evaluation and laboratory testing gets the greatest value out of each method (Kantner & Rosenbaum 1997: 160; Nielsen 1993: 225). Therefore, the Department of Information Science at the University of Saarland, Germany, developed a usability engineering process (Harms & Schweibenz 2000: 19-20) and tested it in a usability study of a museum Web site. This paper presents the experiences from that study, which combined an expert-focused heuristic evaluation based on the Heuristics for Web Communication with a user-focused evaluation using laboratory tests with actual users and the thinking-aloud method.

2. A Survey of Methods for Usability Engineering

The methods of usability engineering can be categorized into expert-focused and user-focused methods. Among the expert-focused methods are several variations of heuristic evaluation. According to Nielsen (1997a: 1543), "heuristic evaluation is a way of finding usability problems in a design by contrasting it with a list of established usability principles". These principles are laid down in guidelines or checklists such as Keevil's Usability Index (Keevil 1998), Molich & Nielsen's nine principles for human-computer dialogue (Molich & Nielsen 1990), or the Heuristics for Web Communication. In the evaluation process, experts compare the product with these guidelines and judge how well the interface complies with recognized usability principles. The advantage of expert-focused evaluation is that it is a relatively simple and fast process: as few as five evaluators can find some 75 per cent of the usability problems of a product in a relatively short time (for details see Levi & Conrad 1996). The disadvantages are that the evaluation must be done by experts and that experts cannot ignore their own knowledge of the subject, i.e. they cannot "step back behind what they already know." They will therefore always be surrogate users, i.e. expert evaluators who emulate users.
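A simple model helps to show why a handful of evaluators goes a long way. The sketch below is our own illustration and not the method used by Levi & Conrad: it assumes that every evaluator independently finds the same fraction `lam` of all problems, so that n evaluators together find `1 - (1 - lam) ** n` of them. The function names are hypothetical. Under this assumption, 75 per cent with five evaluators implies that each evaluator finds roughly a quarter of the problems.

```python
"""Sketch: share of usability problems found by n independent evaluators.

Assumption (not from the study): each evaluator independently detects the
same fraction `lam` of all problems, so n evaluators together find
1 - (1 - lam) ** n of them.
"""


def share_found(lam: float, n: int) -> float:
    """Expected fraction of all problems found by n evaluators."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must be between 0 and 1")
    return 1.0 - (1.0 - lam) ** n


def implied_rate(share: float, n: int) -> float:
    """Per-evaluator detection rate that yields `share` with n evaluators."""
    return 1.0 - (1.0 - share) ** (1.0 / n)


if __name__ == "__main__":
    lam = implied_rate(0.75, 5)  # five evaluators find about 75 per cent
    print(f"implied per-evaluator rate: {lam:.2f}")
    for n in (1, 3, 5, 10):
        print(n, f"{share_found(lam, n):.0%}")
```

With this assumed rate, ten evaluators would be expected to find well over 90 per cent of the problems; real evaluators differ in skill and do not work fully independently, so the model is only a rough guide.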

In contrast to expert-focused methods, user-focused methods rely on actual users to test the usability of a product. This process is called user testing, and according to Nielsen (1997a: 1543) it "is the most fundamental usability method and is in some sense irreplaceable, since it provides direct information about how people use computers and what their exact problems are with the concrete interface being tested." There are various methods of user testing. One of the most popular and most effective is the laboratory test with the thinking-aloud method (Nielsen 1993: 195), which was used in our case study. The advantage of user-focused evaluation is that the tests supply a large amount of qualitative data showing how actual users handle the product. The disadvantages are that the tests take place in a laboratory situation and that a lot of equipment and coordination is necessary to conduct them, which makes the method labor-intensive.

3. Description of the Usability Study

3.1 The Process

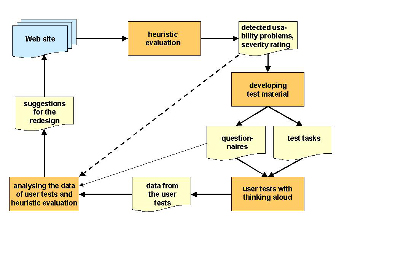

In cooperation with the Saarland Museum - Stiftung Saarländischer Kulturbesitz, the art museum of the federal state of Saarland, the Department of Information Science at the University of Saarland evaluated the museum's Web site (http://www.saarlandmuseum.de). The site is a graphically designed Web site of the third generation (Siegel, 1997) and went online in summer 1999. The evaluation project was carried out by sixteen graduate students who had received training in usability engineering in a research class, and two lecturers as coordinators. The study had two aims. The first aim was to evaluate some evaluation methods, especially the Heuristics for Web Communication; the second was to improve the usability of the Web site of the Saarland Museum. Therefore we decided to use a combination of heuristic evaluation and user testing as suggested in the research literature. Figure 1 illustrates the process.

Figure 1: The evaluation process of the usability study

3.2 Heuristic Evaluation With the Heuristics for Web Communication

The first step was a heuristic evaluation. As mentioned above, there is a multitude of heuristics. Heuristics can be specific to a certain domain or generally applicable. They can be design-oriented, evaluation-oriented, or both. They can be based on research or on the experience of practitioners. Consequently, heuristics vary in extent and quality. In our case study we used the Heuristics for Web Communication, developed by the faculty of the Departments of Technical Communication of the University of Washington, Seattle, and the University of Twente, the Netherlands. The heuristics are based on research findings in technical writing and cognitive psychology and were evaluated in a workshop with 40 participants, both students and lecturers of technical communication, and professional Web developers from various Web design companies in the Seattle area. The heuristics were revised according to the feedback of the workshop participants and the Web developers and were published in a special issue of the Journal of Technical Communication in August 2000.

The Heuristics for Web Communication consist of five different heuristics. The five heuristics deal with all important aspects of Web sites: displaying information, navigation, text comprehension, role playing (i.e. author-reader relationship), and data collection for analyzing interaction. The content of the heuristics can be summed up as follows:

The heuristic Displaying information on the Web consists of guidelines for visuals, e.g.

- how to design and arrange display elements,

- how to ensure that text is readable,

- how to use pictures, illustrations, icons and motion.

The Heuristic for Web Navigation deals with hypertext theory and offers guidelines for navigation and orientation, e.g.

- how to design orientation information on each page,

- how to coordinate navigation devices,

- how to design site-level orientation information.

The heuristic Text Comprehension and the Web: Heuristics for Writing Understandable Web Pages focuses on text comprehension and issues of text quality, e.g.

- how to select, design, and organize content,

- what style to use,

- what makes Web pages credible and trustworthy.

The heuristic Role Playing on the Web discusses the typical rhetorical roles of the implied author and the implied reader of Web pages, e.g.

- how rhetoric is used to describe author roles and reader roles, and

- what kind of relationship exists between author roles and reader roles.

The heuristic Web Data Collection for Analyzing and Interacting with Your Users focuses on analyzing the audience of a Web site and building a relationship either between you and your users or among the users themselves, using for example

- server log data for analyzing the use of Web pages and their audience, and

- means to build a relationship and create a sense of community with the audience.

The four content-oriented heuristics (the heuristic on Web Data Collection was not applied because access to the log files was restricted) were applied following Kantner & Rosenbaum (1997: 155). The graduate students, who had received an introduction to the heuristics, worked in teams of four. Each team evaluated the same selection of pages from the chosen Web site. In a two-hour individual evaluation session, each team member applied one of the four heuristics to the Web site and noted usability problems according to the points listed in that heuristic. The team members then met and discussed their findings. Each team graded the usability problems it had detected using Nielsen's severity rating (Nielsen 1997b), which ranges from 0 (no usability problem) to 4 (usability catastrophe) (cf. Table 1) and takes into account the frequency and persistence of the problems and their impact on users. At the end of the evaluation, the four teams presented their findings in a plenary meeting. The whole evaluation process took about five hours.

Table 1: Severity rating according to Nielsen

| Rating | Description |
|---|---|
| 0 | I don't agree that this is a usability problem at all |
| 1 | Cosmetic problem only: need not be fixed unless extra time is available on project |
| 2 | Minor usability problem: fixing this should be given low priority |
| 3 | Major usability problem: important to fix, so should be given high priority |
| 4 | Usability catastrophe: imperative to fix this before product can be released |
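When findings are collected electronically, Nielsen's scale maps naturally onto code. The sketch below is our own illustration, not a tool used in the study: it stores the five levels of Table 1 as an `IntEnum`, combines several evaluators' ratings of one problem by taking the median rounded down (a common choice, but an assumption here), and orders a list of findings by priority. The problem names echo issues mentioned in this paper; the ratings are hypothetical.

```python
from enum import IntEnum
from statistics import median


class Severity(IntEnum):
    """Nielsen's five-level severity scale (Table 1)."""
    NOT_A_PROBLEM = 0
    COSMETIC = 1
    MINOR = 2
    MAJOR = 3
    CATASTROPHE = 4


def combined_severity(ratings: list[int]) -> Severity:
    """Combine individual ratings for one problem (median, rounded down)."""
    if not ratings:
        raise ValueError("at least one rating is required")
    return Severity(int(median(ratings)))


def prioritize(findings: dict[str, list[int]]) -> list[tuple[str, Severity]]:
    """Return (problem, combined severity) pairs, most severe first."""
    rated = [(name, combined_severity(r)) for name, r in findings.items()]
    return sorted(rated, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical ratings from three evaluators, for illustration only.
    findings = {
        "links hidden in splash-screen image": [4, 3, 4],
        "identical title on every page": [3, 3, 2],
        "inconsistent link colours": [1, 2, 1],
    }
    for problem, severity in prioritize(findings):
        print(f"{severity.value} {severity.name:<13} {problem}")
```

Using an `IntEnum` keeps the ratings comparable and sortable as plain integers while still printing a readable label for the re-design list.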

The most frequent usability problems were navigation and orientation problems as described in the Heuristic for Web Navigation, followed by general design problems as described in the heuristic Displaying information on the Web. After the rating, the two lecturers collected the written findings of the team members and compiled a list of all usability problems for a redesign of the Web site. The findings were also used to design tasks for a user test in the laboratory.
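Compiling the teams' notes into one list amounts to merging duplicate reports and counting how often each heuristic is involved. A minimal sketch, assuming each finding is recorded as a (heuristic, problem) pair whose wording the teams have already harmonized; the function name and the sample data are hypothetical and do not reproduce the study's results.

```python
from collections import Counter


def compile_findings(team_reports: list[list[tuple[str, str]]]) -> tuple[Counter, Counter]:
    """Merge team reports into one de-duplicated list plus a per-heuristic tally.

    Each report is a list of (heuristic, problem) pairs. The first result maps
    every distinct pair to the number of teams that reported it; the second
    counts distinct problems per heuristic.
    """
    reported_by = Counter()
    for report in team_reports:
        reported_by.update(set(report))  # count each team at most once per problem
    tally = Counter(heuristic for heuristic, _problem in reported_by)
    return reported_by, tally


if __name__ == "__main__":
    teams = [  # hypothetical team reports
        [("navigation", "no orientation cue on subpages"),
         ("display", "low-contrast body text")],
        [("navigation", "no orientation cue on subpages"),
         ("navigation", "exhibitions not linked to opening hours")],
    ]
    problems, per_heuristic = compile_findings(teams)
    print(per_heuristic.most_common())
    print(problems[("navigation", "no orientation cue on subpages")])
```

Counting each team at most once per problem means that a problem found independently by several teams stands out, which can serve as one more hint for prioritizing the re-design.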

3.3 User Testing in the Usability Laboratory With the Thinking-Aloud Method

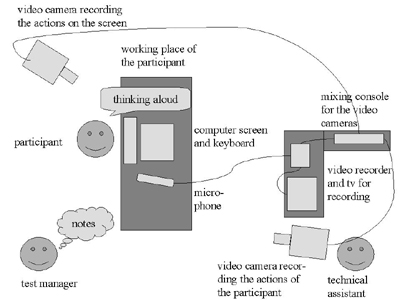

The next step of the evaluation was a user test in the usability laboratory. Figure 2 shows a sketch of the laboratory in which the tests took place.

Figure 2: A sketch of the usability lab

In the lab, real users have to work on tasks while thinking aloud, i.e. they verbalize their thoughts and comment on their actions while they handle the computer. This "allows a very direct understanding of what parts of the dialogue cause the most problems" (Nielsen 1993: 195). During the test, users work on standardized test tasks and are supervised by a test manager. The tests are recorded on video by a technical assistant who operates two video cameras. One of the cameras is focused on the face and hands of the participant, the other one on the computer screen. The recordings of the two cameras are blended together on one screen and recorded on video. In order to catch the details of interaction, a digital screencam records the actions on the screen. In a labor-intensive process, the findings of the tests are transcribed and categorized.

As we were evaluating an art museum Web site, it was suggested that we recruit participants with an interest in art. We therefore asked students of the Arts and Science department and art teachers to participate in our experiments; the teachers were included to raise the average age of the participants. The number of participants was based on Virzi (1992: 468), who suggests at least 15 participants. In our study, 17 users participated: five teachers and 12 students of the Arts and Science department. Seven participants were male and ten female. The youngest user was 19, the oldest 48, and the average age was 27.

It takes some time and effort to design the test task scenario for the user test of a large informational Web site (cf. Kantner & Rosenbaum 1997: 154). The test tasks should be as representative as possible of the use to which the system will be put in the field and at the same time small enough to be completed in a reasonable time frame, but not so small as to be trivial (Nielsen 1993: 185f). The test scenario, which had been discussed with the client of our case study, consisted of nine tasks that represented potential usability problems. Table 2 shows a selection of the potential problems and the test tasks.

Table 2: A selection from the test tasks of the user tests

| Potential usability problem | Selection of the test tasks (abbreviated) |
|---|---|
| Links are hidden in graphical design (images). | 1) Go from the splash screen to the core page. |
| Insufficiently linked information: the exhibition is not linked to the opening hours. | 3) Look for the opening hours of a future exhibition. |
| Insufficiently linked information: the exhibition is not linked to the service section where tours are offered. | 4) Look for guided tours to the current exhibition. |
| All pages are titled the same. There are no individual title tags on the different pages. | 7) Use bookmarks to go back to a certain page. |
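The problem behind task 7, identical titles on every page, can also be detected automatically before any user test. A minimal sketch using Python's standard `html.parser`; it assumes the pages are available as HTML strings keyed by URL (the URLs and helper names below are hypothetical) and reports every title that more than one page shares.

```python
from collections import defaultdict
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collect the text inside the first <title> element of a page."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self._in_title = False
        self._done = False

    def handle_starttag(self, tag, attrs):
        if tag == "title" and not self._done:
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title" and self._in_title:
            self._in_title = False
            self._done = True

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def page_title(html: str) -> str:
    """Return the page title with runs of whitespace collapsed."""
    parser = TitleParser()
    parser.feed(html)
    parser.close()
    return " ".join(parser.title.split())


def duplicate_titles(pages: dict[str, str]) -> dict[str, list[str]]:
    """Map each title used by more than one page to the URLs that share it."""
    by_title: dict[str, list[str]] = defaultdict(list)
    for url, html in pages.items():
        by_title[page_title(html)].append(url)
    return {title: urls for title, urls in by_title.items() if len(urls) > 1}


if __name__ == "__main__":
    pages = {  # hypothetical pages
        "/index.html": "<html><head><title>Saarland Museum Saarbrücken</title></head></html>",
        "/exhibitions.html": "<html><head><title>Saarland Museum Saarbrücken</title></head></html>",
        "/service.html": "<html><head><title>Service - Saarland Museum</title></head></html>",
    }
    print(duplicate_titles(pages))
```

Such a check only shows that titles repeat; whether a title is informative enough for a bookmark list is still a matter for the evaluator.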

The user tests confirmed that all the presumed problems were indeed usability problems for the test users. The findings were grouped into several categories, e.g. problems handling the splash screen (task 1) and insufficient links between related information (tasks 3 and 4), and illustrated with vivid quotes from the test protocols. For example, after finishing task 1 a participant stated: "The first screen only shows a headline, a picture and an address but no link. (break) I click on the picture. It works!" Another remarked: "As an Internet beginner I honestly have a problem to get to the next page. I'm a little helpless because I prefer big arrows and buttons that say 'next page.' But I made it." While struggling with task 4, one participant lost patience and said: "Now I would try to get in touch with someone and write an email, if they offer a guided tour, because all the searching takes far too long." Trying to use the bookmarks in task 7, a participant said: "Usually I would use the bookmarks. (clicks on bookmarks) Well, now I see that all the bookmarks have the same name." Another participant used sarcasm: "That's really funny, because I have five bookmarks all named Saarland Museum Saarbrücken. That helps a lot. Great! (laughs)."

Quotes like these make usability problems come alive and show the reactions of actual users in real situations. From our experience, these reactions are much more convincing than dry statements of experts no matter how profound these statements might be and no matter on what kind of theory they are based.

The test time and performance of the users were influenced by their computer literacy, as measured by data collected in a questionnaire. The average test time was about 20 minutes, which corresponds to the test time expected from three pre-tests. The test durations show some connection between computer literacy and the time needed to complete the tasks: the shortest test, 14 minutes, was conducted with a user who had used the Web for two years or longer and several times a week; the longest, 30 minutes, with a participant with little computer literacy. We did not consider further analysis necessary, because the duration of a test is also influenced by other factors, such as interest in the subject and the medium, and by the thinking-aloud method itself.

4. Practical Experiences With the Different Methods

In our case study, the theoretical foundation of the heuristic evaluation was the Heuristics for Web Communication. At the time of our evaluation, these heuristics were brand new, and there was little practical experience in applying them. We therefore thought it useful to contrast them with another heuristic evaluation tool, Keevil's Usability Index. According to Keevil (1998: 271), the usability index is a "measure, expressed as a per cent, of how closely the features of a Web site match generally accepted usability guidelines." The Usability Index consists of five categories (Keevil 1998: 273):

- Finding the information: Can you find the information you want?

- Understanding the information: After you find the information, can you understand it?

- Supporting user tasks: Does the information help you perform a task?

- Evaluating the technical accuracy: Is the technical information complete?

- Presenting the information: Does the information look like a quality product?

Judging from the 203 questions, Keevil's Usability Index seems focused on commercial Web sites. But it seemed adequate to use it for the Web site of a cultural heritage institution, because Keevil (1998: 275) points out that the Usability Index is generally applicable: "Information Developers can use the checklist to measure how easy it is to find, understand, and use information displayed on a Web site."

In our case study, fifteen students (one of the sixteen did not hand in the index) used the Usability Index to evaluate the Web site of the Saarland Museum. Table 3 shows the results with the categories 'N/A' (not applicable), 'Yes', and 'No', the sum, and the usability in per cent, which is calculated as the total number of 'Yes' answers divided by the total number of 'Yes' and 'No' answers.
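The calculation is simple enough to state in a few lines. The sketch below is our illustration (the function name is hypothetical and not taken from Keevil): it leaves 'N/A' answers out of both numerator and denominator and checks the result against the counts for Evaluators 1 and 2 in Table 3.

```python
def usability_index(yes: int, no: int) -> float:
    """Share of 'Yes' answers among all 'Yes' and 'No' answers.

    'N/A' answers are deliberately not a parameter: they are excluded
    from both the numerator and the denominator.
    """
    answered = yes + no
    if answered == 0:
        raise ValueError("no applicable questions were answered")
    return yes / answered


if __name__ == "__main__":
    # Counts for Evaluators 1 and 2 as reported in Table 3.
    for name, yes, no in [("Evaluator 1", 57, 47), ("Evaluator 2", 19, 47)]:
        print(f"{name}: {usability_index(yes, no):.0%}")
```

This prints 55% and 29%, the values given in Table 3 for these two evaluators.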

Table 3: Results of Keevil's Usability Index for the Saarland Museum Web site

| Evaluator | N/A | Yes | No | Sum | Usability |
|---|---|---|---|---|---|
| Evaluator 1 | 96 | 57 | 47 | 200 | 55% |
| Evaluator 2 | 134 | 19 | 47 | 200 | 29% |
| Evaluator 3 | 71 | 67 | 62 | 200 | 52% |
| Evaluator 4 | 71 | 67 | 62 | 200 | 52% |
| Evaluator 5 | 81 | 51 | 68 | 200 | 43% |
| Evaluator 6 | 62 | 69 | 69 | 200 | 50% |
| Evaluator 7 | 96 | 48 | 53 | 197 | 48% |
| Evaluator 8 | 64 | 61 | 74 | 199 | 45% |
| Evaluator 9 | 77 | 60 | 62 | 199 | 49% |
| Evaluator 10 | 77 | 60 | 62 | 199 | 49% |
| Evaluator 11 | 77 | 60 | 62 | 199 | 49% |
| Evaluator 12 | 81 | 61 | 58 | 200 | 51% |
| Evaluator 13 | 66 | 57 | 53 | 176 | 52% |
| Evaluator 14 | 69 | 53 | 81 | 203 | 40% |
| Evaluator 15 | 88 | 40 | 72 | 200 | 36% |

Table 3 shows a wide range of usability scores for the Saarland Museum Web site, from 29% to 55%, with an arithmetic mean of 47%. This deviation is remarkable. It reflects differences in how the evaluators interpreted the questions. One reason is that certain sets of questions from the Usability Index did not fit the Web site under evaluation. In such cases, some evaluators chose 'N/A' while others chose 'No'. This explains the large differences in the 'N/A' and 'No' counts and in the overall usability, because 'N/A' answers are excluded from the calculation while 'No' answers lower the score. Another reason is that the checklist, like every checklist, is open to interpretation. Keevil (1998: 275) was aware of this problem and tried to reduce it by allowing only the answers 'N/A', 'Yes', and 'No', but there is still considerable room for interpretation. Apart from the problem of interpretation, the Usability Index has another disadvantage: it gives a percentage that indicates the usability and some hints about usability problems that can be derived from the 203 questions, but it hardly identifies concrete usability problems.
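Both the summary figures and the effect of the 'N/A' versus 'No' choice can be reproduced from the counts in Table 3. The sketch below recomputes the range and the mean of the per-evaluator scores, then shows, for a hypothetical evaluator whose counts are invented for illustration, how re-classifying ill-fitting questions from 'N/A' to 'No' lowers the index although the site itself is unchanged. The helper names are our own.

```python
from statistics import mean

# (N/A, Yes, No) per evaluator, as reported in Table 3.
TABLE_3 = [
    (96, 57, 47), (134, 19, 47), (71, 67, 62), (71, 67, 62), (81, 51, 68),
    (62, 69, 69), (96, 48, 53), (64, 61, 74), (77, 60, 62), (77, 60, 62),
    (77, 60, 62), (81, 61, 58), (66, 57, 53), (69, 53, 81), (88, 40, 72),
]


def usability_index(yes: int, no: int) -> float:
    """Share of 'Yes' among 'Yes' and 'No' answers; 'N/A' is ignored."""
    return yes / (yes + no)


def summary(rows: list[tuple[int, int, int]]) -> tuple[float, float, float]:
    """Minimum, maximum and arithmetic mean of the per-evaluator scores."""
    scores = [usability_index(yes, no) for _na, yes, no in rows]
    return min(scores), max(scores), mean(scores)


if __name__ == "__main__":
    low, high, avg = summary(TABLE_3)
    print(f"range {low:.0%} to {high:.0%}, mean {avg:.0%}")

    # Hypothetical evaluator: 20 questions do not fit the museum site.
    yes, no, ill_fitting = 60, 50, 20
    print(f"ill-fitting answered N/A: {usability_index(yes, no):.0%}")
    print(f"ill-fitting answered No:  {usability_index(yes, no + ill_fitting):.0%}")
```

The first line reproduces the range of 29% to 55% and the mean of 47%; for the hypothetical evaluator, the same 20 questions move the score from 55% to 46% depending only on whether they are answered 'N/A' or 'No'.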

The identification of concrete usability problems and suggestions on how to improve usability are the practical advantages of the Heuristics for Web Communication. The heuristics are not simply checklists that can be answered by 'N/A', 'Yes', and 'No'. They are guidelines designed as statements and questions that guide the evaluator to identify concrete usability problems by asking guiding questions like, "Can you decipher all of the elements in the display easily? If not, consider making them larger," or "Which of the organization's values should be emphasized?" or giving hints like, "Make sure the most important links appear high enough on the page to be visible without scrolling, regardless of the resolution of the user's monitor. When pages must scroll, provide visual cues to encourage users to scroll down to links that are below the scroll line." By contrasting these established usability principles with the Web site under evaluation, the evaluator or information designer can decide if usability problems exist, what kind they are, and how they can be removed. This is the big advantage of the heuristics.

The disadvantage of the Heuristics for Web Communication is that they are very detailed and complicated compared with general heuristics such as those of Molich & Nielsen, who suggest nine basic items of usability (Molich & Nielsen 1990: 338) (Table 4).

Table 4: Molich & Nielsen's nine basic items of usability

| No. | Basic item of usability |
|---|---|
| 1 | Use simple and natural language |
| 2 | Speak the user's language |
| 3 | Minimize the user's memory load |
| 4 | Be consistent |
| 5 | Provide feedback |
| 6 | Provide clearly marked exits |
| 7 | Provide shortcuts |
| 8 | Provide helpful error messages |
| 9 | Prevent errors |

Although it is possible to do a successful evaluation with these nine basic items of usability, evaluators might need more guidance in the evaluation process, as is offered by the Heuristics for Web Communication. These heuristics support the evaluators by providing a structured "guided tour" for the evaluation process that takes into account both the big picture and important details. They help the evaluators to consider all substantial usability issues and to focus on the important points. Moreover they generate a profound impression of the overall quality of a Web site. This makes the Heuristics for Web Communication a valuable tool for Web usability engineering.

The four content-oriented heuristics (the fifth heuristic was not applied because access to the log files was restricted) differ considerably in their ease of application and in the background knowledge they require. We found that the heuristic Displaying information on the Web, the Heuristic for Web Navigation, and the heuristic Text Comprehension and the Web can be successfully applied if the evaluators have an average level of knowledge in information design and Web design. The evaluators in our case study, all graduate students of information science, had no difficulties in applying them. The heuristic Role Playing on the Web requires some special knowledge of hypertext theory, as it is based on the quite complicated author-reader relationship in hypertext (Michalak & Coney 1993). Although it is very interesting and provides promising results, the evaluators in our case study had some difficulties in applying it.

As expected from the research literature (Nielsen 1992: 378f), the heuristics detected a large number of so-called minor usability problems. This is no disadvantage at all, because user testing is not an adequate means of identifying such minor problems. Minor problems included, for example, inconsistent use of link colors, missing text labels for graphic links, complicated sentences, deficits in page structure and organization, a lack of informative titles, meaningless animation, and flaws in the author-reader relationship. Although these are real usability problems, they are not observable in user testing: average users lack the background knowledge in information design and Web design needed to recognize that such deficiencies cause problems.
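Some of these minor problems lend themselves to automated checks. As one example, graphic links without any accompanying text can be found in the page markup. The following sketch uses the standard `html.parser` and is meant only as an illustration (the class and function names are hypothetical): it lists the `href` of every link that contains an image but has neither a non-empty `alt` attribute nor visible link text. It ignores CSS background images, `title` attributes and other edge cases.

```python
from html.parser import HTMLParser


class GraphicLinkChecker(HTMLParser):
    """Find <a> elements that contain an <img> but no text alternative."""

    def __init__(self) -> None:
        super().__init__()
        self._href = None          # href of the <a> currently open, if any
        self._has_image = False
        self._has_text = False
        self.problems: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "a":
            self._href = attributes.get("href") or ""
            self._has_image = False
            self._has_text = False
        elif tag == "img" and self._href is not None:
            self._has_image = True
            # attribute values may be None (e.g. a bare `alt`), hence `or ""`
            if (attributes.get("alt") or "").strip():
                self._has_text = True

    def handle_data(self, data):
        if self._href is not None and data.strip():
            self._has_text = True

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            if self._has_image and not self._has_text:
                self.problems.append(self._href)
            self._href = None


def graphic_links_without_text(html: str) -> list[str]:
    """Return the href of every image link lacking a text alternative."""
    checker = GraphicLinkChecker()
    checker.feed(html)
    checker.close()
    return checker.problems


if __name__ == "__main__":
    sample = (  # hypothetical markup
        '<a href="collection.html"><img src="c.gif"></a>'
        '<a href="service.html"><img src="s.gif" alt="Service"></a>'
        '<a href="news.html">News</a>'
    )
    print(graphic_links_without_text(sample))
```

For the sample markup, only `collection.html` is reported. A check like this complements, but does not replace, the evaluator's judgment of whether a label is meaningful.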

The user tests in the usability lab were very labor-intensive for several reasons: the technical equipment had to be arranged, the test scenario had to be designed and tested, participants had to be recruited, and the tests had to be conducted with two experimenters who were present throughout to supervise the participants and the technical equipment. The analysis and evaluation of the test data were also time-consuming because the data had to be transcribed and categorized. The major advantage of this method was that the recordings, especially the screencam files, showed cursor movements that helped to identify problems in navigation and orientation. This is especially helpful when discussing the findings and suggestions for the redesign with the client. A simplified thinking-aloud test, in which the experimenters simply take notes of their observations, is less labor-intensive than videotaping and transcribing the tests. In our experience, however, it is difficult for one or two experimenters to follow the course of the test and take notes at the same time if the test covers more than a few basic functions. Therefore, videotaping or screencam recording is essential. An alternative to transcribing whole test sessions would be to transcribe only the most important sequences of each test.

An important point we noticed when comparing the questionnaire answers with the course of the tests is that answers about the test experience are often not very reliable. The answers about satisfaction with the Web site often did not correspond to the experience of the participants as observed during the test. For example, several participants stated that they had no problems with navigation and orientation although they had had serious problems during the test. The reasons for this gap between behaviour and statements cannot be discussed here. In our experience, a questionnaire alone cannot provide reliable results. This is not new; it confirms the phenomenon that people's impressions of their own behaviour deviate to some extent from their actual behaviour in real situations. Nevertheless, we found questionnaires or interviews necessary to give the participants the opportunity to comment on the course of the test. Test users appreciate this opportunity, and the results can be used to derive additional information about the acceptance of the Web site.

5. Conclusion

The choice of evaluation method in usability engineering depends on what is being evaluated and on the goals of the evaluation. Although the combination of heuristic evaluation and user testing provides good results, it is costly in terms of time and resources. With respect to the cost-benefit ratio, heuristic evaluation alone is sufficient in many cases to detect a reasonable number of minor and major usability problems.

In our case study, the Heuristics for Web Communication proved to be applicable tools for heuristic evaluation. The heuristics support a structured evaluation and help both to find and to solve usability problems. In contrast to checklists, they give the evaluators some scope for interpretation while offering guidance at the same time. The drawback of the heuristics is that they cannot be successfully applied by novice evaluators. The evaluators need some background knowledge in Web design and evaluation. The heuristics were helpful in pointing out critical points in the Web site that were evaluated in the user test. Compared to user testing, the heuristic evaluation was less labor-intensive. Nevertheless, user testing is a very valuable tool for usability engineering because actual users give an impression of how the Web site will be used in practice. Moreover, actual users might have problems with features that experts do not realize are problematic. The focus on the actual users and the vivid and expressive statements they give justifies the much higher expense in certain cases. From our experience, the combination of both heuristic evaluation with the Heuristics for Web Communication and user testing with thinking aloud is a very useful method of usability engineering.

References

Cleary, Y. (2000): An Examination of the Impact of Subjective Cultural Issues on the Usability of a Localized Web Site - The Louvre Museum Web Site. In: Museums and the Web 2000: Conference Proceedings. CD-ROM documenting the Fourth International Conference held in Minneapolis, April 17-19, 2000. Pittsburgh, PA: Archives & Museum Informatics.

Garzotto, F. / Matera, M. / Paolini, P. (1998): To Use or Not to Use? Evaluating Usability of Museum Web Sites. In: Museums and the Web 1998: Conference Proceedings. CD-ROM documenting the Second International Conference held in Toronto, Ontario, Canada, April 21-25, 1998. Pittsburgh, PA: Archives & Museum Informatics.

Harms, I. / Schweibenz, W. (2000): Usability Engineering Methods for the Web. Results From a Usability Study. In: Informationskompetenz - Basiskompetenz in der Informationsgesellschaft. Proceedings des 7. Internationalen Symposiums für Informationswissenschaft (ISI 2000) Dieburg 8.-10. November 2000. Ed. by G. Knorz and R. Kuhlen. (Schriften zur Informationswissenschaft 38). Konstanz: UKV. 17-30.

Heuristics for Web Communication. Special Issue of the Journal of Technical Communication, 47 (3) August 2000.

Kantner, L. / Rosenbaum, S. (1997): Usability Studies of WWW Sites: Heuristic Evaluation vs. Laboratory Testing. In: Proceedings of the 15th International Conference on Computer Documentation SIGDOC '97: Crossroads in Communication. 19-22 October 1997, Snowbird, UT. New York, NY: ACM Press. 153-160.

Keevil, B. (1998): Measuring the Usability Index of Your Web Site. In: Proceedings of the Computer-Human Interaction CHI '98 Conference, April 18-23 1998, Los Angeles, CA. New York, NY: ACM Press. 271-277. Also available online: Internet, URL http://www3.sympatico.ca/bkeevil/sigdoc98/index.html. Version: 09/98. Consulted: 12/06/00.

Krömker, H. (1999): Die Welt der Benutzerfreundlichkeit. [The World of User-Friendliness.] In: Hennig, J. / Tjarks-Sobhani, M. (eds.): Verständlichkeit und Nutzungsfreundlichkeit von technischer Dokumentation. (tekom Schriften zur technischen Kommunikation Vol. 1) Lübeck: Schmidt-Römhild. 22-33.

Levi, M. D. / Conrad, F. G. (1996): A Heuristic Evaluation of a World Wide Web Prototype. In: interactions, 07/1996: 51-61.

Michalak, S. / Coney, M. (1993): Hypertext and the Author/Reader Dialogue. In: Proceedings of Hypertext '93, November 14-18 1993, Seattle, WA. New York, NY: ACM. 174-182.

Molich, R. / Nielsen, J. (1990): Improving A Human-computer Dialogue. In: Communications of the ACM, 33 (3) 1990: 338-348.

Nielsen, J. (1993): Usability Engineering. Boston: Academic Press.

Nielsen, J. (1997a): Usability Testing. In: Salvendy, G. (ed.): Handbook of Human Factors and Ergonomics. 2nd edition. New York, NY: John Wiley & Sons. 1543-1568.

Nielsen, J. (1997b): Severity Ratings for Usability Problems. Internet, URL http://www.useit.com/papers/heuristic/severityrating.html. Version: 01/11/99. Consulted: 12/06/00.

Rajani, R. / Rosenberg, D. (1999): Usable?...Or not?...Factors Affecting the Usability of Web Sites. In: CMC Computer-Mediated Communication Magazine. Internet, URL http://www.december.com/cmc/mag/1999/jan/rakros.html. Version: 01/99. Consulted: 12/06/00.

Siegel, D. (1997): Creating Killer Web Sites. The Art of Third-generation Site Design. Indianapolis, IN: Hayden.

Silverstone, R. (1988): Museums and the Media: A Theoretical and Methodological Exploration. In: International Journal of Museum Management and Curatorship, Vol. 7, No. 3, 1988: 231-241.

Schweibenz, W. (1998): The "Virtual Museum": New Perspectives For Museums to Present Objects and Information Using the Internet as a Knowledge Base and Communication System. In: Knowledge Management und Kommunikationssysteme. Workflow Management, Multimedia, Knowledge Transfer. Proceedings des 6. Internationalen Symposiums für Informationswissenschaft (ISI '98) Prag, 3.-7. November 1998. Ed. by H. H. Zimmermann and V. Schramm. (Schriften zur Informationswissenschaft 34). Konstanz: UKV. 185-200. Also available online: Internet, URL http://www.phil.uni-sb.de/fr/infowiss/projekte/virtualmuseum/virtual_museum_ISI98.htm. Version: 11/05/98. Consulted: 12/06/00.

Teather, L. / Wilhelm, K. (1999): "Web Musing": Evaluating Museums on the Web from Learning Theory to Methodology. In: Museums and the Web 1999: Conference Proceedings. CD-ROM documenting the Third International Conference held in New Orleans, LA, March 11-14, 1999. Pittsburgh, PA: Archives & Museum Informatics.

Virzi, R. A. (1992): Refining the Test Phase of Usability Evaluation: How Many Subjects Is Enough? In: Human Factors, 34 (4) 1992: 457-468.